Packaging Guide

This guide is intended for developers or administrators who want to package software so that Spack can install it. It assumes that you have at least some familiarity with Python, and that you’ve read the basic usage guide, especially the part about specs.

There are two key parts of Spack:

Specs: expressions for describing builds of software, and

Packages: Python modules that describe how to build and test software according to a spec.

Specs allow a user to describe a particular build in a way that a package author can understand. Packages allow the packager to encapsulate the build logic for different versions, compilers, options, platforms, and dependency combinations in one place. Essentially, a package translates a spec into build logic. It also allows the packager to write spec-specific tests of the installed software.

Packages in Spack are written in pure Python, so you can do anything in Spack that you can do in Python. Python was chosen as the implementation language for two reasons. First, Python is becoming ubiquitous in the scientific software community. Second, it’s a modern language and has many powerful features to help make package writing easy.

Warning

As a general rule, packages should install the software from source. The only exception is for proprietary software (e.g., vendor compilers).

If a special build system needs to be added in order to support building a package from source, then the associated code and recipe should be added first.

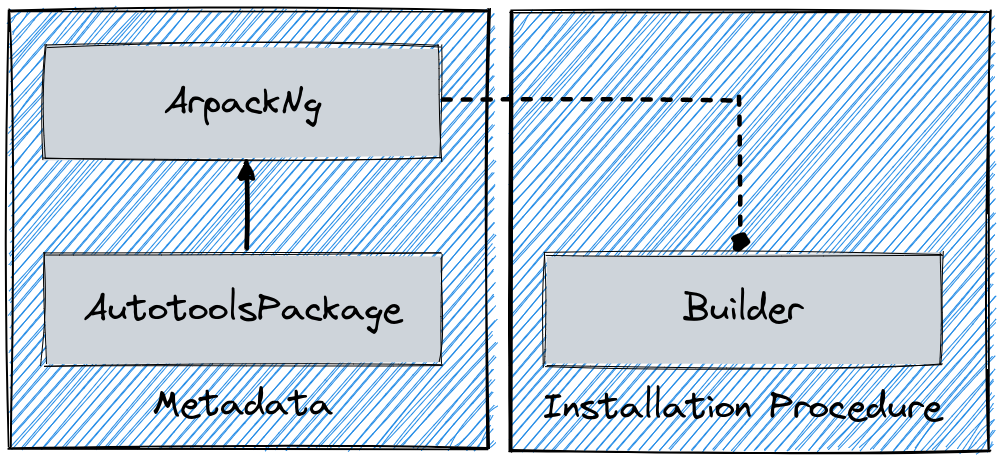

Overview of the installation procedure

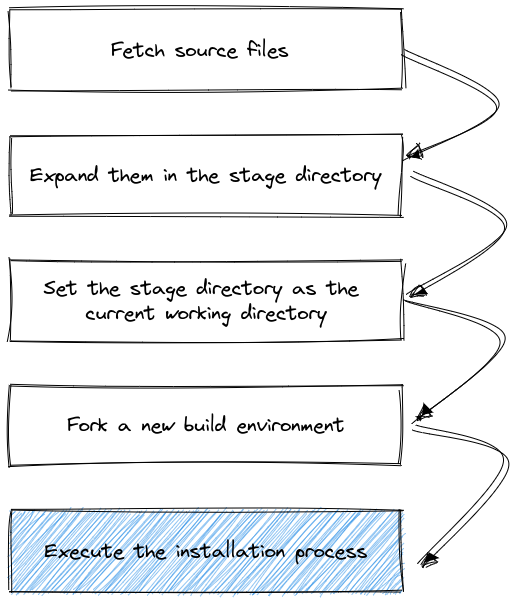

Whenever Spack installs software, it goes through a series of predefined steps:

All these steps are influenced by the metadata in each package.py and by the current Spack configuration.

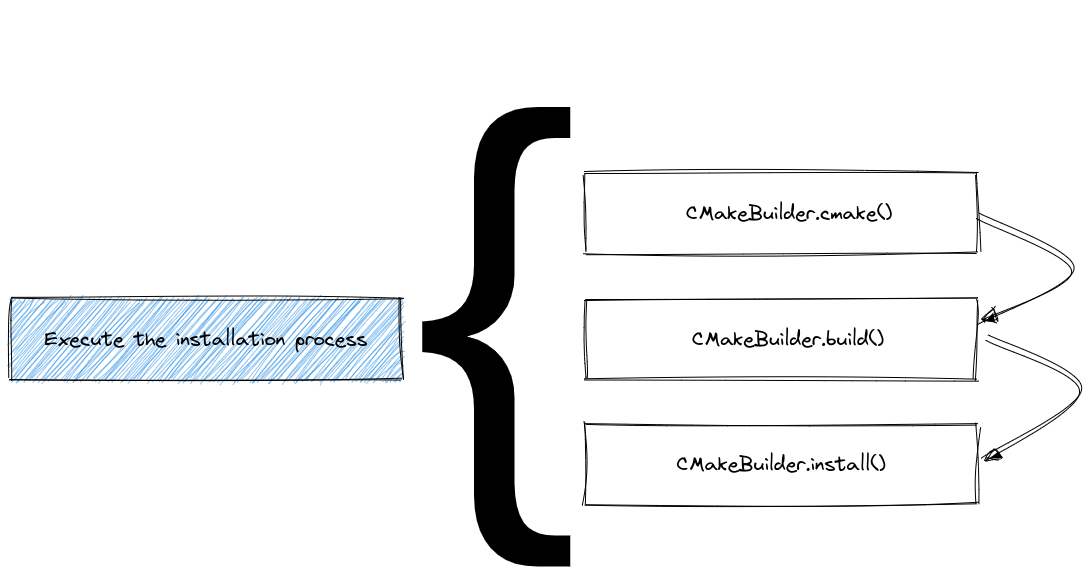

Since build systems are different from one another, the execution of the last block in the figure is further expanded in a build-system-specific way. An example for CMake is, for instance:

The predefined steps for each build system are called “phases”. In general, the names of the phases and the order in which they are executed can be obtained either by reading the API docs at build_systems or by using the spack info command:

$ spack info --phases m4

AutotoolsPackage: m4

Homepage: https://www.gnu.org/software/m4/m4.html

Safe versions:

1.4.17 ftp://ftp.gnu.org/gnu/m4/m4-1.4.17.tar.gz

Variants:

Name Default Description

sigsegv on Build the libsigsegv dependency

Installation Phases:

autoreconf configure build install

Build Dependencies:

libsigsegv

...

An extensive list of available build systems and phases is provided in Overriding build system defaults.

Writing a package recipe

Since v0.19, Spack supports two ways of writing a package recipe. The most commonly used is to encode both the metadata (directives, etc.) and the build behavior in a single class, as shown in the following example:

class Openjpeg(CMakePackage):
    """OpenJPEG is an open-source JPEG 2000 codec written in C language"""

    homepage = "https://github.com/uclouvain/openjpeg"
    url = "https://github.com/uclouvain/openjpeg/archive/v2.3.1.tar.gz"

    version("2.4.0", sha256="8702ba68b442657f11aaeb2b338443ca8d5fb95b0d845757968a7be31ef7f16d")

    variant("codec", default=False, description="Build the CODEC executables")
    depends_on("libpng", when="+codec")

    def url_for_version(self, version):
        if version >= Version("2.1.1"):
            return super().url_for_version(version)
        url_fmt = "https://github.com/uclouvain/openjpeg/archive/version.{0}.tar.gz"
        return url_fmt.format(version)

    def cmake_args(self):
        args = [
            self.define_from_variant("BUILD_CODEC", "codec"),
            self.define("BUILD_MJ2", False),
            self.define("BUILD_THIRDPARTY", False),
        ]
        return args

A package encoded with a single class is backward compatible with versions of Spack lower than v0.19, and so are custom repositories containing only recipes of this kind. The downside is that this format doesn’t allow packagers to use more than one build system in a single recipe.

To do that, we have to resort to the second way Spack has of writing packages, which involves writing a builder class explicitly. Using the same example as above, this reads:

class Openjpeg(CMakePackage):
    """OpenJPEG is an open-source JPEG 2000 codec written in C language"""

    homepage = "https://github.com/uclouvain/openjpeg"
    url = "https://github.com/uclouvain/openjpeg/archive/v2.3.1.tar.gz"

    version("2.4.0", sha256="8702ba68b442657f11aaeb2b338443ca8d5fb95b0d845757968a7be31ef7f16d")

    variant("codec", default=False, description="Build the CODEC executables")
    depends_on("libpng", when="+codec")

    def url_for_version(self, version):
        if version >= Version("2.1.1"):
            return super().url_for_version(version)
        url_fmt = "https://github.com/uclouvain/openjpeg/archive/version.{0}.tar.gz"
        return url_fmt.format(version)


class CMakeBuilder(spack.build_systems.cmake.CMakeBuilder):
    def cmake_args(self):
        args = [
            self.define_from_variant("BUILD_CODEC", "codec"),
            self.define("BUILD_MJ2", False),
            self.define("BUILD_THIRDPARTY", False),
        ]
        return args

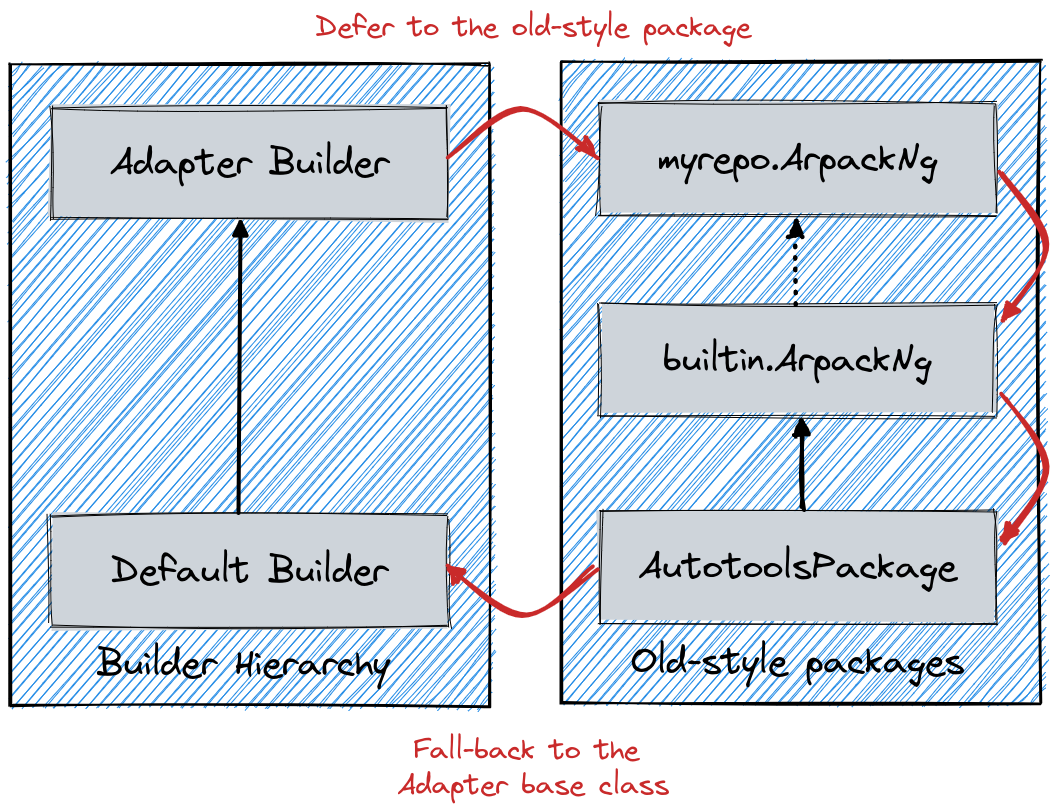

This way of writing packages allows extending the recipe to support multiple build systems; see Multiple build systems for more details. The downside is that recipes of this kind are only understood by Spack v0.19 and later. More information on the internal architecture of Spack can be found at Package class architecture.

Note

If a builder is implemented in package.py, all build-specific methods must be moved to the builder. This means that if you have a package like

class Foo(CMakePackage):
    def cmake_args(self):
        ...

and you add a builder to the package.py, you must move cmake_args to the builder.

Creating new packages

To help you create a new package, Spack provides a command that generates a package.py file in an existing repository, with a boilerplate package template. Here’s an example:

$ spack create https://gmplib.org/download/gmp/gmp-6.1.2.tar.bz2

Spack examines the tarball URL and tries to figure out the name of the package to be created. If the name contains uppercase letters, these are automatically converted to lowercase. If the name contains underscores or periods, these are automatically converted to dashes.
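The renaming rules above can be sketched in a few lines of Python. This is only an illustration of the stated rules, not Spack's own code; the function name `normalize_package_name` is hypothetical.

```python
def normalize_package_name(name):
    """Apply the renaming rules described above (illustrative sketch).

    Uppercase letters become lowercase, and underscores and periods
    become dashes. The function name is hypothetical, not a Spack API.
    """
    return name.lower().replace("_", "-").replace(".", "-")


# A name detected from a tarball would be normalized like this:
print(normalize_package_name("GMP"))          # gmp
print(normalize_package_name("Foo_Bar.ext"))  # foo-bar-ext
```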

Spack also searches for additional versions located in the same directory of the website. Spack tells you how many versions it found and asks how many you would like to download and checksum:

$ spack create https://gmplib.org/download/gmp/gmp-6.1.2.tar.bz2

==> This looks like a URL for gmp

==> Found 16 versions of gmp:

6.1.2 https://gmplib.org/download/gmp/gmp-6.1.2.tar.bz2

6.1.1 https://gmplib.org/download/gmp/gmp-6.1.1.tar.bz2

6.1.0 https://gmplib.org/download/gmp/gmp-6.1.0.tar.bz2

...

5.0.0 https://gmplib.org/download/gmp/gmp-5.0.0.tar.bz2

How many would you like to checksum? (default is 1, q to abort)

Spack will automatically download the number of tarballs you specify (starting with the most recent) and checksum each of them.

You do not have to download all of the versions up front. You can always choose to download just one tarball initially, and run spack checksum later if you need more versions.

Spack automatically creates a directory in the appropriate repository, generates a boilerplate template for your package, and opens up the new package.py in your favorite $EDITOR (see Controlling the editor for details):

# Copyright 2013-2024 Lawrence Livermore National Security, LLC and other
# Spack Project Developers. See the top-level COPYRIGHT file for details.
#
# SPDX-License-Identifier: (Apache-2.0 OR MIT)

# ----------------------------------------------------------------------------
# If you submit this package back to Spack as a pull request,
# please first remove this boilerplate and all FIXME comments.
#
# This is a template package file for Spack. We've put "FIXME"
# next to all the things you'll want to change. Once you've handled
# them, you can save this file and test your package like this:
#
#     spack install gmp
#
# You can edit this file again by typing:
#
#     spack edit gmp
#
# See the Spack documentation for more information on packaging.
# ----------------------------------------------------------------------------
import spack.build_systems.autotools
from spack.package import *


class Gmp(AutotoolsPackage):
    """FIXME: Put a proper description of your package here."""

    # FIXME: Add a proper url for your package's homepage here.
    homepage = "https://www.example.com"
    url = "https://gmplib.org/download/gmp/gmp-6.1.2.tar.bz2"

    # FIXME: Add a list of GitHub accounts to
    # notify when the package is updated.
    # maintainers("github_user1", "github_user2")

    version("6.2.1", sha256="eae9326beb4158c386e39a356818031bd28f3124cf915f8c5b1dc4c7a36b4d7c")

    # FIXME: Add dependencies if required.
    # depends_on("foo")

    def configure_args(self):
        # FIXME: Add arguments other than --prefix
        # FIXME: If not needed delete the function
        args = []
        return args

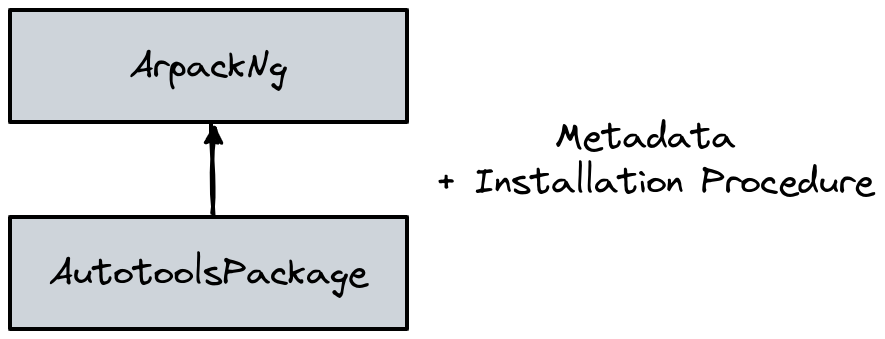

The tedious stuff (creating the class, checksumming archives) has been

done for you. Spack correctly detected that gmp uses the autotools

build system, so it created a new Gmp package that subclasses the

AutotoolsPackage base class.

The default installation procedure for a package subclassing the AutotoolsPackage

is to go through the typical process of:

./configure --prefix=/path/to/installation/directory

make

make check

make install

For most Autotools packages, this is sufficient. If you need to add

additional arguments to the ./configure call, add them via the

configure_args function.

In the generated package, the download url attribute is already

set. All the things you still need to change are marked with

FIXME labels. You can delete the commented instructions between

the license and the first import statement after reading them.

The rest of the tasks you need to do are as follows:

Add a description. Immediately inside the package class is a docstring in triple-quotes ("""). It is used to generate the description shown when users run spack info.

Change the homepage to a useful URL. The homepage is displayed when users run spack info so that they can learn more about your package.

Add a comma-separated list of maintainers. Add a list of GitHub accounts of people who want to be notified any time the package is modified. See Maintainers.

Add depends_on() calls for the package’s dependencies. depends_on tells Spack that other packages need to be built and installed before this one. See Dependencies.

Get the installation working. Your new package may require specific flags during configure. These can be added via configure_args. Specifics will differ depending on the package and its build system. Overriding build system defaults is covered in detail later.

Controlling the editor

When Spack needs to open an editor for you (e.g., for commands like Creating new packages or Editing existing packages), it looks at several environment variables to figure out what to use. The order of precedence is:

SPACK_EDITOR: highest precedence, in case you want something specific for Spack;

VISUAL: standard environment variable for full-screen editors like vim or emacs;

EDITOR: older environment variable for your editor.

You can set any of these to the command you want to run, e.g., in bash you might run one of these:

export VISUAL=vim

export EDITOR="emacs -nw"

export SPACK_EDITOR=nano

If Spack finds none of these variables set, it will look for vim, vi, emacs,

nano, and notepad, in that order.
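The lookup order described in this section can be sketched as follows. This is a minimal illustration of the documented precedence, not Spack's implementation; `pick_editor` and its parameters are hypothetical names, and the real logic may perform additional checks (for example, validating that the configured command exists).

```python
import os
import shutil

# Environment variables in order of precedence, as listed above.
ENV_VARS = ["SPACK_EDITOR", "VISUAL", "EDITOR"]
# Fallback editors tried, in order, when no variable is set.
FALLBACKS = ["vim", "vi", "emacs", "nano", "notepad"]


def pick_editor(environ=None, which=shutil.which):
    """Return the editor command to run, or None if nothing is found.

    `environ` and `which` are injectable so the lookup can be exercised
    without touching the real environment (an assumption of this sketch).
    """
    environ = os.environ if environ is None else environ
    for var in ENV_VARS:
        value = environ.get(var)
        if value:
            return value
    for name in FALLBACKS:
        if which(name):
            return name
    return None


# VISUAL takes precedence over EDITOR when both are set.
print(pick_editor({"VISUAL": "vim", "EDITOR": "emacs -nw"}))  # vim
```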

Bundling software

If you have a collection of software expected to work well together with no source code of its own, you can create a BundlePackage. Examples where bundle packages can be useful include defining suites of applications (e.g., EcpProxyApps), commonly used libraries (e.g., AmdAocl), and software development kits (e.g., EcpDataVisSdk).

These versioned packages primarily consist of dependencies on the associated software packages. They can include variants to ensure common build options are consistently applied to dependencies. Known build failures, such as not building on a platform or when certain compilers or variants are used, can be flagged with conflicts. Build requirements, such as only building with specific compilers, can similarly be flagged with requires.

The spack create --template bundle command will create a skeleton

BundlePackage package.py for you:

$ spack create --template bundle --name coolsdk

Now you can fill in the basic package documentation, version(s), and software package dependencies along with any other relevant customizations.

Note

Remember that bundle packages have no software of their own so there is nothing to download.

Non-downloadable software

If your software cannot be downloaded from a URL you can still create a boilerplate

package.py by telling spack create what name you want to use:

$ spack create --name intel

This will create a simple intel package with an install()

method that you can craft to install your package.

Likewise, you can force the build system to be used with --template and,

in case it’s needed, you can overwrite a package already in the repository

with --force:

$ spack create --name gmp https://gmplib.org/download/gmp/gmp-6.1.2.tar.bz2

$ spack create --force --template autotools https://gmplib.org/download/gmp/gmp-6.1.2.tar.bz2

A complete list of available build system templates can be found by running

spack create --help.

Editing existing packages

One of the easiest ways to learn how to write packages is to look at

existing ones. You can edit a package file by name with the spack

edit command:

$ spack edit gmp

If you used spack create to create a package, you can get back to

it later with spack edit. For instance, the gmp package actually

lives in:

$ spack location -p gmp

${SPACK_ROOT}/var/spack/repos/builtin/packages/gmp/package.py

but spack edit provides a much simpler shortcut and saves you the

trouble of typing the full path.

Naming & directory structure

This section describes how packages need to be named, and where they live in Spack’s directory structure. In general, Creating new packages handles creating package files for you, so you can skip most of the details here.

var/spack/repos/builtin/packages

A Spack installation directory is structured like a standard UNIX

install prefix (bin, lib, include, var, opt,

etc.). Most of the code for Spack lives in $SPACK_ROOT/lib/spack.

Packages themselves live in $SPACK_ROOT/var/spack/repos/builtin/packages.

If you cd to that directory, you will see directories for each

package:

$ cd $SPACK_ROOT/var/spack/repos/builtin/packages && ls

3dtk

3proxy

7zip

abacus

abduco

abi-compliance-checker

abi-dumper

abinit

abseil-cpp

abyss

...

Each directory contains a file called package.py, which is where all the Python code for the package goes. For example, the libelf package lives in:

$SPACK_ROOT/var/spack/repos/builtin/packages/libelf/package.py

Alongside the package.py file, a package may contain extra

directories or files (like patches) that it needs to build.

Package Names

Packages are named after the directory containing package.py. So,

libelf’s package.py lives in a directory called libelf.

The package.py file defines a class called Libelf, which

extends Spack’s Package class. For example, here is

$SPACK_ROOT/var/spack/repos/builtin/packages/libelf/package.py:

from spack.package import *


class Libelf(Package):
    """ ... description ... """
    homepage = ...
    url = ...
    version(...)
    depends_on(...)

    def install(self, spec, prefix):
        ...

The directory name (libelf) determines the package name that

users should provide on the command line. e.g., if you type any of

these:

$ spack info libelf

$ spack versions libelf

$ spack install libelf@0.8.13

Spack sees the package name in the spec and looks for

libelf/package.py in var/spack/repos/builtin/packages.

Likewise, if you run spack install py-numpy, Spack looks for

py-numpy/package.py.

Spack uses the directory name as the package name in order to give

packagers more freedom in naming their packages. Package names can

contain letters, numbers, and dashes. Using a Python identifier

(e.g., a class name or a module name) would make it difficult to

support these options. So, you can name a package 3proxy or

foo-bar and Spack won’t care. It just needs to see that name

in the packages directory.

Package class names

Spack loads package.py files dynamically, and it needs to find a

special class name in the file for the load to succeed. The class

name (Libelf in our example) is formed by converting words

separated by - in the file name to CamelCase. If the name

starts with a number, we prefix the class name with _. Here are

some examples:

| Module Name | Class Name |
|---|---|
| foo-bar | FooBar |
| 3proxy | _3proxy |

In general, you won’t have to remember this naming convention because Creating new packages and Editing existing packages handle the details for you.
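For illustration, the naming convention described above can be written as a small function. This sketch follows only the two rules stated in this section (CamelCase on dashes, `_` prefix for a leading digit); `class_name_for` is a hypothetical name, and Spack's own conversion routine may handle more cases.

```python
def class_name_for(pkg_name):
    """Derive the expected class name from a package (directory) name.

    Words separated by "-" are converted to CamelCase, and a leading
    digit is prefixed with "_". Hypothetical helper, not a Spack API.
    """
    class_name = "".join(word.capitalize() for word in pkg_name.split("-"))
    if class_name[:1].isdigit():
        class_name = "_" + class_name
    return class_name


for name in ["libelf", "py-numpy", "foo-bar", "3proxy"]:
    print(name, "->", class_name_for(name))
```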

Maintainers

Each package in Spack may have one or more maintainers, i.e. one or more GitHub accounts of people who want to be notified any time the package is modified.

When a pull request is submitted that updates the package, these people will be requested to review the PR. This is useful for developers who maintain a Spack package for their own software, as well as users who rely on a piece of software and want to ensure that the package doesn’t break. It also gives users a list of people to contact for help when someone reports a build error with the package.

To add maintainers to a package, simply declare them with the maintainers directive:

maintainers("user1", "user2")

The list of maintainers is additive, and includes all the accounts eventually declared in base classes.

Trusted Downloads

Spack verifies that the source code it downloads is not corrupted or compromised; or at least, that it is the same version the author of the Spack package saw when the package was created. If Spack uses a download method it can verify, we say the download method is trusted. Trust is important for all downloads: Spack has no control over the security of the various sites from which it downloads source code, and can never assume that any particular site hasn’t been compromised.

Trust is established in different ways for different download methods.

For the most common download method — a single-file tarball — the

tarball is checksummed. Git downloads using commit= are trusted

implicitly, as long as a hash is specified.

Spack also provides untrusted download methods: tarball URLs may be supplied without a checksum, or Git downloads may specify a branch or tag instead of a hash. If the user does not control or trust the source of an untrusted download, it is a security risk. Unless otherwise specified by the user for special cases, Spack should by default use only trusted download methods.

Unfortunately, Spack does not currently provide that guarantee. It does provide the following mechanisms for safety:

By default, Spack will only install a tarball package if it has a checksum and that checksum matches. You can override this with spack install --no-checksum.

Numeric versions are almost always tarball downloads, whereas non-numeric versions not named develop frequently download untrusted branches or tags from a version control system. As long as a package has at least one numeric version, and no non-numeric version named develop, Spack will prefer it over any non-numeric versions.

Checksums

For tarball downloads, Spack can currently support checksums using the MD5, SHA-1, SHA-224, SHA-256, SHA-384, and SHA-512 algorithms. It determines the algorithm to use based on the hash length.
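As a rough sketch of how an algorithm can be inferred from the hash length, the snippet below maps the length of a hexadecimal digest to the corresponding hashlib algorithm and verifies some data against it. The names `algorithm_for` and `verify` are hypothetical and this is not Spack's implementation; only the list of algorithms comes from the text above.

```python
import hashlib

# Length of the hexadecimal digest for each supported algorithm.
HEX_LENGTH_TO_ALGORITHM = {
    32: "md5",
    40: "sha1",
    56: "sha224",
    64: "sha256",
    96: "sha384",
    128: "sha512",
}


def algorithm_for(hexdigest):
    """Guess the hash algorithm from the digest length (raises KeyError if unknown)."""
    return HEX_LENGTH_TO_ALGORITHM[len(hexdigest)]


def verify(data, expected_hexdigest):
    """Return True if `data` (bytes) hashes to `expected_hexdigest`."""
    algorithm = algorithm_for(expected_hexdigest)
    actual = hashlib.new(algorithm, data).hexdigest()
    return actual == expected_hexdigest.lower()


# A 32-character digest is treated as MD5, a 64-character one as SHA-256.
print(algorithm_for("4136d7b4c04df68b686570afa26988ac"))
print(algorithm_for("8702ba68b442657f11aaeb2b338443ca8d5fb95b0d845757968a7be31ef7f16d"))
```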

Versions and fetching

The most straightforward way to add new versions to your package is to add a line like this in the package class:

class Foo(Package):
    url = "http://example.com/foo-1.0.tar.gz"

    version("8.2.1", md5="4136d7b4c04df68b686570afa26988ac")
    version("8.2.0", md5="1c9f62f0778697a09d36121ead88e08e")
    version("8.1.2", md5="d47dd09ed7ae6e7fd6f9a816d7f5fdf6")

Note

By convention, we list versions in descending order, from newest to oldest.

Note

Bundle packages do not have source code so

there is nothing to fetch. Consequently, their version directives

consist solely of the version name (e.g., version("202309")).

Date Versions

If you wish to use dates as versions, it is best to use the format

@yyyy-mm-dd. This will ensure they sort in the correct order.

Alternately, you might use a hybrid release-version / date scheme.

For example, @1.3_2016-08-31 would mean the version from the

1.3 branch, as of August 31, 2016.

Version URLs

By default, each version’s URL is extrapolated from the url field

in the package. For example, Spack is smart enough to download

version 8.2.1 of the Foo package above from

http://example.com/foo-8.2.1.tar.gz.

If the URL is particularly complicated or changes based on the release,

you can override the default URL generation algorithm by defining your

own url_for_version() function. For example, the download URL for

OpenMPI contains the major.minor version in one spot and the

major.minor.patch version in another:

https://download.open-mpi.org/release/open-mpi/v2.1/openmpi-2.1.1.tar.bz2

In order to handle this, you can define a url_for_version() function

like so:

def url_for_version(self, version):
    url = "https://download.open-mpi.org/release/open-mpi/v{0}/openmpi-{1}.tar.bz2"
    return url.format(version.up_to(2), version)

With the use of this url_for_version(), Spack knows to download OpenMPI 2.1.1 from https://download.open-mpi.org/release/open-mpi/v2.1/openmpi-2.1.1.tar.bz2 but download OpenMPI 1.10.7 from https://download.open-mpi.org/release/open-mpi/v1.10/openmpi-1.10.7.tar.bz2.

You’ll notice that OpenMPI’s url_for_version() function makes use of a special Version function called up_to(). When you call version.up_to(2) on a version like 1.10.0, it returns 1.10. version.up_to(1) would return 1. This can be very useful for packages that place all X.Y.* versions in a single directory and then place all X.Y.Z versions in a sub-directory.

There are a few Version properties you should be aware of. We generally

prefer numeric versions to be separated by dots for uniformity, but not all

tarballs are named that way. For example, icu4c separates its major and minor

versions with underscores, like icu4c-57_1-src.tgz. The value 57_1 can be

obtained with the use of the version.underscored property. Note that Python

properties don’t need parentheses. There are other separator properties as well:

| Property | Result |
|---|---|
| version.dotted | 1.2.3 |
| version.dashed | 1-2-3 |
| version.underscored | 1_2_3 |
| version.joined | 123 |

Note

Python properties don’t need parentheses. version.dashed is correct.

version.dashed() is incorrect.

In addition, these version properties can be combined with up_to().

For example:

>>> version = Version("1.2.3")

>>> version.up_to(2).dashed

Version("1-2")

>>> version.underscored.up_to(2)

Version("1_2")

As you can see, order is not important. Just keep in mind that up_to() and

the other version properties return Version objects, not strings.
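To make the behavior of up_to() and the separator properties concrete outside of Spack, here is a small stand-in class. `ToyVersion` is a hypothetical, heavily simplified model written for this illustration; it is not Spack's Version class and only handles dot-separated input.

```python
class ToyVersion:
    """Simplified stand-in for a version object (not Spack's Version)."""

    def __init__(self, components, sep="."):
        self.components = list(components)
        self.sep = sep

    @classmethod
    def parse(cls, string):
        # This sketch only understands dot-separated versions.
        return cls(string.split("."))

    def up_to(self, n):
        # Keep the first n components and the current separator.
        return ToyVersion(self.components[:n], self.sep)

    @property
    def dotted(self):
        return ToyVersion(self.components, ".")

    @property
    def dashed(self):
        return ToyVersion(self.components, "-")

    @property
    def underscored(self):
        return ToyVersion(self.components, "_")

    @property
    def joined(self):
        return ToyVersion(self.components, "")

    def __str__(self):
        return self.sep.join(self.components)


version = ToyVersion.parse("2.1.1")
# Each call returns another ToyVersion, so the calls can be chained.
print(version.up_to(2).dashed)
print(version.underscored.up_to(2))

# Used the same way as in the url_for_version() example above:
url = "https://download.open-mpi.org/release/open-mpi/v{0}/openmpi-{1}.tar.bz2"
print(url.format(version.up_to(2), version))
```

Because every method and property returns a new object rather than a string, the calls compose in either order, which mirrors the point made above about return types.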

If a URL cannot be derived systematically, or there is a special URL for one of its versions, you can add an explicit URL for a particular version:

version("8.2.1", md5="4136d7b4c04df68b686570afa26988ac",
        url="http://example.com/foo-8.2.1-special-version.tar.gz")

When you supply a custom URL for a version, Spack uses that URL verbatim and does not perform extrapolation. The order of precedence of these methods is:

package-level url

url_for_version()

version-specific url

so if your package contains a url_for_version(), it can be overridden

by a version-specific url.

If your package does not contain a package-level url or url_for_version(),

Spack can determine which URL to download from even if only some of the versions

specify their own url. Spack will use the nearest URL before the requested

version. This is useful for packages that have an easy to extrapolate URL, but

keep changing their URL format every few releases. With this method, you only

need to specify the url when the URL changes.

Mirrors of the main URL

Spack supports listing mirrors of the main URL in a package by defining

the urls attribute:

class Foo(Package):
    urls = [
        "http://example.com/foo-1.0.tar.gz",
        "http://mirror.com/foo-1.0.tar.gz"
    ]

instead of just a single url. This attribute is a list of possible URLs that

will be tried in order when fetching packages. Notice that either one of url

or urls can be present in a package, but not both at the same time.

A well-known case of packages that can be fetched from multiple mirrors is that

of GNU. For that, Spack goes a step further and defines a mixin class that

takes care of all of the plumbing and requires packagers to just define a proper

gnu_mirror_path attribute:

class Autoconf(AutotoolsPackage, GNUMirrorPackage):
    """Autoconf -- system configuration part of autotools"""

    homepage = "https://www.gnu.org/software/autoconf/"
    gnu_mirror_path = "autoconf/autoconf-2.69.tar.gz"

    license("GPL-3.0-or-later WITH Autoconf-exception-3.0", when="@2.62:", checked_by="tgamblin")
    license("GPL-2.0-or-later WITH Autoconf-exception-2.0", when="@:2.59", checked_by="tgamblin")

Skipping the expand step

Spack normally expands archives (e.g. *.tar.gz and *.zip) automatically

into a standard stage source directory (self.stage.source_path) after

downloading them. If you want to skip this step (e.g., for self-extracting

executables and other custom archive types), you can add expand=False to a

version directive.

version("8.2.1", md5="4136d7b4c04df68b686570afa26988ac",
        url="http://example.com/foo-8.2.1-special-version.sh", expand=False)

When expand is set to False, Spack sets the current working

directory to the directory containing the downloaded archive before it

calls your install method. Within install, the path to the

downloaded archive is available as self.stage.archive_file.

Here is an example snippet for packages distributed as self-extracting archives. The example sets permissions on the downloaded file to make it executable, then runs it with some arguments.

def install(self, spec, prefix):
    set_executable(self.stage.archive_file)
    installer = Executable(self.stage.archive_file)
    installer("--prefix=%s" % prefix, "arg1", "arg2", "etc.")

Deprecating old versions

There are many reasons to remove old versions of software:

Security vulnerabilities (most serious reason)

Changing build systems that increase package complexity

Changing dependencies/patches/resources/flags that increase package complexity

Maintainer/developer inability/unwillingness to support old versions

No longer available for download (right to be forgotten)

Package or version rename

At the same time, there are many reasons to keep old versions of software:

Reproducibility

Requirements for older packages (e.g. some packages still rely on Qt 3)

In general, you should not remove old versions from a package.py. Instead,

you should first deprecate them using the following syntax:

version("1.2.3", sha256="...", deprecated=True)

This has two effects. First, spack info will no longer advertise that

version. Second, commands like spack install that fetch the package will

require user approval:

$ spack install openssl@1.0.1e

==> Warning: openssl@1.0.1e is deprecated and may be removed in a future Spack release.

==> Fetch anyway? [y/N]

If you use spack install --deprecated, this check can be skipped.

This also applies to package recipes that are renamed or removed. You should first deprecate all versions before removing a package. If you need to rename it, you can deprecate the old package and create a new package at the same time.

Version deprecations should always last at least one Spack minor release cycle

before the version is completely removed. For example, if a version is

deprecated in Spack 0.16.0, it should not be removed until Spack 0.17.0. No

version should be removed without such a deprecation process. This gives users

a chance to complain about the deprecation in case the old version is needed

for some application. If you require a deprecated version of a package, simply

submit a PR to remove deprecated=True from the package. However, you may be

asked to help maintain this version of the package if the current maintainers

are unwilling to support this older version.

Download caching

Spack maintains a cache (described here) which saves files

retrieved during package installations to avoid re-downloading in the case that

a package is installed with a different specification (but the same version) or

reinstalled on account of a change in the hashing scheme. It may (rarely) be

necessary to avoid caching for a particular version by adding no_cache=True

as an option to the version() directive. Example situations would be a

“snapshot”-like Version Control System (VCS) tag, a VCS branch such as

v6-16-00-patches, or a URL specifying a regularly updated snapshot tarball.

Version comparison

Spack imposes a generic total ordering on the set of versions, independently from the package they are associated with.

Most Spack versions are numeric, a tuple of integers; for example,

0.1, 6.96 or 1.2.3.1. In this very basic case, version

comparison is lexicographical on the numeric components:

1.2 < 1.2.1 < 1.2.2 < 1.10.

Spack also supports string components such as 1.1.1a and 1.y.0. String components are considered less than numeric components, so 1.y.0 < 1.0. This is for consistency with RPM. String components do not have to be separated by dots or any other delimiter. So, the contrived version 1y0 is identical to 1.y.0.

Pre-release suffixes also contain string parts, but they are handled

in a special way. For example 1.2.3alpha1 is parsed as a pre-release

of the version 1.2.3. This allows Spack to order it before the

actual release: 1.2.3alpha1 < 1.2.3. Spack supports alpha, beta and

release candidate suffixes: 1.2alpha1 < 1.2beta1 < 1.2rc1 < 1.2. Any

suffix not recognized as a pre-release is treated as an ordinary

string component, so 1.2 < 1.2-mysuffix.

Finally, there are a few special string components that are considered

“infinity versions”. They include develop, main, master,

head, trunk, and stable. For example: 1.2 < develop.

These are useful for specifying the most recent development version of

a package (often a moving target like a git branch), without assigning

a specific version number. Infinity versions are not automatically used when determining the latest version of a package unless explicitly required by another package or user.

More formally, the order on versions is defined as follows. A version

string is split into a list of components based on delimiters such as

. and - and string boundaries. The components are split into

the release and a possible pre-release (if the last component

is numeric and the second to last is a string alpha, beta or rc).

The release components are ordered lexicographically, with comparison between different types of components as follows:

The following special strings are considered larger than any other numeric or non-numeric version component, and satisfy the following order between themselves: develop > main > master > head > trunk > stable.

Numbers are ordered numerically, are less than special strings, and larger than other non-numeric components.

All other non-numeric components are less than numeric components, and are ordered alphabetically.

Finally, if the release components are equal, the pre-release components are used to break the tie: a pre-release sorts before the corresponding release, and alpha < beta < rc.
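The ordering rules above can be approximated with a sort key. The following is a simplified sketch written for this guide, not Spack's actual comparison code; the names `component_key` and `version_key` are hypothetical, and edge cases beyond the examples in this section are not covered.

```python
import re

# Special "infinity" components, from largest to smallest.
INFINITY = ["develop", "main", "master", "head", "trunk", "stable"]
# Pre-release kinds in increasing order; a final release sorts after all of them.
PRERELEASE = {"alpha": 0, "beta": 1, "rc": 2}
FINAL = 3


def component_key(comp):
    """Rank one release component: other strings < numbers < infinity strings."""
    if comp in INFINITY:
        # Earlier in INFINITY means larger, so negate the index.
        return (2, -INFINITY.index(comp), "")
    if comp.isdigit():
        return (1, int(comp), "")
    return (0, 0, comp)


def version_key(version):
    """Return a sort key for a version string (simplified sketch)."""
    # Split on delimiters and on boundaries between digits and letters.
    comps = re.findall(r"[0-9]+|[a-zA-Z]+", version)
    pre = (FINAL, 0)
    # A trailing "<alpha|beta|rc><number>" pair marks a pre-release.
    if len(comps) >= 2 and comps[-1].isdigit() and comps[-2] in PRERELEASE:
        pre = (PRERELEASE[comps[-2]], int(comps[-1]))
        comps = comps[:-2]
    return ([component_key(c) for c in comps], pre)


versions = ["develop", "1.2", "1.2rc1", "1.10", "1.y.0", "1.2alpha1", "1.2.1"]
print(sorted(versions, key=version_key))
```

Comparing the release components as a list gives the lexicographic behavior described above, and the pre-release pair is only consulted when the release components are equal.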

The logic behind this sort order is two-fold:

Non-numeric versions are usually used for special cases while developing or debugging a piece of software. Keeping most of them less than numeric versions ensures that Spack chooses numeric versions by default whenever possible.

The most-recent development version of a package will usually be newer than any released numeric versions. This allows the @develop version to satisfy dependencies like depends_on(abc, when="@x.y.z:").
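The ordering rules above can be sketched as a sort key in plain Python. This is only an illustration of the rules as stated in this section, not Spack's own implementation; the names version_key and component_key are made up for the example, and the splitting regex is a simplification.

```python
import re

# Special "infinity" strings, listed from smallest to largest.
INFINITY = ["stable", "trunk", "head", "master", "main", "develop"]
# Recognized pre-release suffixes, from earliest to latest.
PRERELEASE = {"alpha": 0, "beta": 1, "rc": 2}
FINAL = 3  # a final release sorts after all of its pre-releases


def component_key(comp):
    """Rank one component: other strings < numbers < infinity strings."""
    if comp in INFINITY:
        return (2, INFINITY.index(comp), "")
    if comp.isdigit():
        return (1, int(comp), "")
    return (0, 0, comp)


def version_key(version):
    """Build a sort key that follows the ordering described above."""
    # Split on delimiters and on boundaries between digits and letters.
    parts = re.findall(r"[0-9]+|[A-Za-z]+", version)
    prerelease = (FINAL, 0)
    # A trailing "<alpha|beta|rc><number>" pair is a pre-release marker.
    if len(parts) >= 2 and parts[-1].isdigit() and parts[-2] in PRERELEASE:
        prerelease = (PRERELEASE[parts[-2]], int(parts[-1]))
        parts = parts[:-2]
    return ([component_key(p) for p in parts], prerelease)
```

Sorting a list of version strings with key=version_key places pre-releases before their release, ordinary suffixes after it, and develop last.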

Version selection

When concretizing, many versions might match a user-supplied spec.

For example, the spec python matches all available versions of the

package python. Similarly, python@3: matches all versions of

Python 3 and above. Given a set of versions that match a spec, Spack

concretization uses the following priorities to decide which one to

use:

1. If the user provided a list of versions in packages.yaml, the first matching version in that list will be used.

2. If one or more versions is specified as preferred=True, in either packages.yaml or package.py, the largest matching version will be used. (“Latest” is defined by the sort order above.)

3. If no preferences in particular are specified in the package or in packages.yaml, then the largest matching non-develop version will be used. By avoiding @develop, this prevents users from accidentally installing a @develop version.

4. If all else fails and @develop is the only matching version, it will be used.
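As a rough sketch, the priorities above could be modeled like this. The function choose_version and its arguments are hypothetical; it assumes the matching versions are already sorted from smallest to largest, and it treats every infinity version name the way the text treats @develop, which is an assumption made for the example.

```python
# Names treated as development versions in this sketch (an assumption).
INFINITY_NAMES = {"develop", "main", "master", "head", "trunk", "stable"}


def choose_version(candidates, yaml_order=(), preferred=()):
    """Pick one version from `candidates` (sorted smallest to largest)."""
    # 1. The first matching version listed in packages.yaml wins.
    for version in yaml_order:
        if version in candidates:
            return version
    # 2. Otherwise, the largest matching version marked preferred=True.
    flagged = [v for v in candidates if v in preferred]
    if flagged:
        return flagged[-1]
    # 3. Otherwise, the largest matching non-development version.
    numbered = [v for v in candidates if v not in INFINITY_NAMES]
    if numbered:
        return numbered[-1]
    # 4. Only development versions match, so use the largest one.
    return candidates[-1]
```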

Ranges versus specific versions

When specifying versions in Spack using the pkg@<specifier> syntax,

you can use either ranges or specific versions. It is generally

recommended to use ranges instead of specific versions when packaging

to avoid overly constraining dependencies, patches, and conflicts.

For example, depends_on("python@3") denotes a range of versions,

allowing Spack to pick any 3.x.y version for Python, while

depends_on("python@=3.10.1") restricts it to a specific version.

Specific @= versions should only be used in exceptional cases, such

as when the package has a versioning scheme that omits the zero in the

first patch release: 3.1, 3.1.1, 3.1.2. In this example,

the specifier @=3.1 is the correct way to select only the 3.1

version, whereas @3.1 would match all those versions.

Ranges are preferred even if they would only match a single version

defined in the package. This is because users can define custom versions

in packages.yaml that typically include a custom suffix. For example,

if the package defines the version 1.2.3, the specifier @1.2.3

will also match a user-defined version 1.2.3-custom.
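To make the difference concrete, here is a small model of the two specifier forms. It is a simplification written for this guide, not Spack's matching code: the helper satisfies is hypothetical, it ignores open and closed ranges written with a colon, and it treats @X as "X or anything that extends X".

```python
import re


def components(version):
    """Split a version string into numeric and alphabetic components."""
    return re.findall(r"[0-9]+|[A-Za-z]+", version)


def satisfies(version, specifier):
    """Return True if `version` is selected by `specifier`."""
    if specifier.startswith("@="):
        # Exact form: only the version written after "@=" matches.
        return version == specifier[2:]
    # Range-like form: match on the leading components only.
    wanted = components(specifier.lstrip("@"))
    return components(version)[: len(wanted)] == wanted
```

With this model, satisfies("3.1.2", "@3.1") is true while satisfies("3.1.2", "@=3.1") is false, and a user-defined 1.2.3-custom is still selected by @1.2.3.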

spack checksum

If you want to add new versions to a package you’ve already created,

this is automated with the spack checksum command. Here’s an

example for libelf:

$ spack checksum libelf

==> Found 16 versions of libelf.

0.8.13 http://www.mr511.de/software/libelf-0.8.13.tar.gz

0.8.12 http://www.mr511.de/software/libelf-0.8.12.tar.gz

0.8.11 http://www.mr511.de/software/libelf-0.8.11.tar.gz

0.8.10 http://www.mr511.de/software/libelf-0.8.10.tar.gz

0.8.9 http://www.mr511.de/software/libelf-0.8.9.tar.gz

0.8.8 http://www.mr511.de/software/libelf-0.8.8.tar.gz

0.8.7 http://www.mr511.de/software/libelf-0.8.7.tar.gz

0.8.6 http://www.mr511.de/software/libelf-0.8.6.tar.gz

0.8.5 http://www.mr511.de/software/libelf-0.8.5.tar.gz

...

0.5.2 http://www.mr511.de/software/libelf-0.5.2.tar.gz

How many would you like to checksum? (default is 1, q to abort)

This does the same thing that spack create does, but it allows you

to go back and add new versions easily as you need them (e.g., as

they’re released). It fetches the tarballs you ask for and prints out

a list of version commands ready to copy/paste into your package

file:

==> Checksummed new versions of libelf:

version("0.8.13", md5="4136d7b4c04df68b686570afa26988ac")

version("0.8.12", md5="e21f8273d9f5f6d43a59878dc274fec7")

version("0.8.11", md5="e931910b6d100f6caa32239849947fbf")

version("0.8.10", md5="9db4d36c283d9790d8fa7df1f4d7b4d9")

By default, Spack will search for new tarball downloads by scraping

the parent directory of the tarball you gave it. So, if your tarball

is at http://example.com/downloads/foo-1.0.tar.gz, Spack will look

in http://example.com/downloads/ for links to additional versions.

If you need to search another path for download links, you can supply

some extra attributes that control how your package finds new

versions. See the documentation on list_url and

list_depth.

Note

This command assumes that Spack can extrapolate new URLs from an existing URL in the package, and that Spack can find similar URLs on a webpage. If that’s not possible, e.g. if the package’s developers don’t name their tarballs consistently, you’ll need to manually add version calls yourself.

For spack checksum to work, Spack needs to be able to import your package in Python. That means it can’t have any syntax errors, or the import will fail. Use this once you’ve got your package in working order.

Finding new versions

You’ve already seen the homepage and url package attributes:

from spack import *


class Mpich(Package):
    """MPICH is a high performance and widely portable implementation of
    the Message Passing Interface (MPI) standard."""
    homepage = "http://www.mpich.org"
    url = "http://www.mpich.org/static/downloads/3.0.4/mpich-3.0.4.tar.gz"

These are class-level attributes used by Spack to show users information about the package, and to determine where to download its source code.

Spack uses the tarball URL to extrapolate where to find other tarballs of the same package (e.g. in spack checksum), but this does not always work. This section covers ways you can tell Spack to find tarballs elsewhere.

list_url

When Spack tries to find available versions of packages (e.g. with

spack checksum), it spiders the parent directory

of the tarball in the url attribute. For example, for libelf, the

url is:

url = "http://www.mr511.de/software/libelf-0.8.13.tar.gz"

Here, Spack spiders http://www.mr511.de/software/ to find similar

tarball links and ultimately to make a list of available versions of

libelf.
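The default listing location is simply the parent directory of the tarball URL. A minimal sketch of that derivation, using only the standard library (default_list_url is a name invented for this example, not a Spack function):

```python
import posixpath
from urllib.parse import urlsplit, urlunsplit


def default_list_url(url):
    """Return the parent directory of a tarball URL, with a trailing slash."""
    parts = urlsplit(url)
    # Drop the file name, then normalize to exactly one trailing slash.
    parent = posixpath.dirname(parts.path).rstrip("/") + "/"
    return urlunsplit((parts.scheme, parts.netloc, parent, "", ""))
```

For the libelf URL above this yields http://www.mr511.de/software/, which is the page that gets searched for links to other versions.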

For many packages, the tarball’s parent directory may be unlistable, or it may not contain any links to source code archives. In fact, many times additional package downloads aren’t even available in the same directory as the download URL.

For these, you can specify a separate list_url indicating the page

to search for tarballs. For example, libdwarf has the homepage as

the list_url, because that is where links to old versions are:

class Libdwarf(Package):
    homepage = "http://www.prevanders.net/dwarf.html"
    url = "http://www.prevanders.net/libdwarf-20130729.tar.gz"
    list_url = homepage

list_depth

libdwarf and many other packages have a listing of available

versions on a single webpage, but not all do. For example, mpich

has a tarball URL that looks like this:

url = "http://www.mpich.org/static/downloads/3.0.4/mpich-3.0.4.tar.gz"

But its downloads are in many different subdirectories of

http://www.mpich.org/static/downloads/. So, we need to add a

list_url and a list_depth attribute:

class Mpich(Package):
    homepage = "http://www.mpich.org"
    url = "http://www.mpich.org/static/downloads/3.0.4/mpich-3.0.4.tar.gz"
    list_url = "http://www.mpich.org/static/downloads/"
    list_depth = 1

By default, Spack only looks at the top-level page available at

list_url. list_depth = 1 tells it to follow up to 1 level of

links from the top-level page. Note that here, this implies 1

level of subdirectories, as the mpich website is structured much

like a filesystem. But list_depth really refers to link depth

when spidering the page.
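The effect of list_depth can be illustrated with a toy crawler that works on an in-memory map of pages to links instead of the network. The function spider and the page layout below are assumptions for the example; they only mimic the idea of following a limited number of link levels.

```python
def spider(pages, root, depth):
    """Collect links found on `root` and on pages up to `depth` links away.

    `pages` maps a page URL to the list of links that page contains.
    """
    seen = {root}
    frontier = [root]
    found = set()
    # Depth 0 reads only the root page; each extra level follows links once more.
    for _ in range(depth + 1):
        next_frontier = []
        for page in frontier:
            for link in pages.get(page, []):
                found.add(link)
                if link not in seen:
                    seen.add(link)
                    next_frontier.append(link)
        frontier = next_frontier
    return found
```

With a layout like the mpich download area, depth 0 only sees the version subdirectories, while depth 1 also reaches the tarballs inside them.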

Fetching from code repositories

For some packages, source code is provided in a Version Control System (VCS) repository rather than in a tarball. Spack can fetch packages from VCS repositories. Currently, Spack supports fetching with Git, Mercurial (hg), Subversion (svn), CVS (cvs), and Go. In all cases, the destination is the standard stage source path.

To fetch a package from a source repository, Spack needs to know which

VCS to use and where to download from. Much like with url, package

authors can specify a class-level git, hg, svn, cvs, or go

attribute containing the correct download location.

Many packages developed with Git have both a Git repository as well as release tarballs available for download. Packages can define both a class-level tarball URL and VCS. For example:

class Trilinos(CMakePackage):
    homepage = "https://trilinos.org/"
    url = "https://github.com/trilinos/Trilinos/archive/trilinos-release-12-12-1.tar.gz"
    git = "https://github.com/trilinos/Trilinos.git"

    version("develop", branch="develop")
    version("master", branch="master")
    version("12.12.1", md5="ecd4606fa332212433c98bf950a69cc7")
    version("12.10.1", md5="667333dbd7c0f031d47d7c5511fd0810")
    version("12.8.1", md5="9f37f683ee2b427b5540db8a20ed6b15")

If a package contains both a url and git class-level attribute,

Spack decides which to use based on the arguments to the version()

directive. Versions containing a specific branch, tag, or revision are

assumed to be for VCS download methods, while versions containing a

checksum are assumed to be for URL download methods.
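The decision rule can be pictured as a small function over the keyword arguments of version(). This is a sketch of the rule as described here, not Spack's code; fetch_kind is a hypothetical helper and the list of checksum keywords is only illustrative.

```python
# Keywords that point at a position in a repository.
VCS_KEYS = ("branch", "tag", "commit", "revision")
# Keywords that carry a checksum (illustrative, not exhaustive).
CHECKSUM_KEYS = ("md5", "sha1", "sha256", "sha512")


def fetch_kind(**version_kwargs):
    """Classify a version() call as a VCS fetch or a URL fetch."""
    if any(key in version_kwargs for key in VCS_KEYS):
        return "vcs"
    if any(key in version_kwargs for key in CHECKSUM_KEYS):
        return "url"
    return "unknown"
```

Applied to the Trilinos example, the develop and master versions are classified as VCS fetches and the numbered releases as URL fetches.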

Like url, if a specific version downloads from a different repository

than the default repo, it can be overridden with a version-specific argument.

Note

In order to reduce ambiguity, each package can only have a single VCS

top-level attribute in addition to url. In the rare case that a

package uses multiple VCS, a fetch strategy can be specified for each

version. For example, the rockstar package contains:

class Rockstar(MakefilePackage):
    homepage = "https://bitbucket.org/gfcstanford/rockstar"

    version("develop", git="https://bitbucket.org/gfcstanford/rockstar.git")
    version("yt", hg="https://bitbucket.org/MatthewTurk/rockstar")

Git

Git fetching supports the following parameters to version:

- git: URL of the git repository, if different than the class-level git.

- branch: Name of a branch to fetch.

- tag: Name of a tag to fetch.

- commit: SHA hash (or prefix) of a commit to fetch.

- submodules: Also fetch submodules recursively when checking out this repository.

- submodules_delete: A list of submodules to forcibly delete from the repository after fetching. Useful if a version in the repository has submodules that have disappeared/are no longer accessible.

- get_full_repo: Ensure the full git history is checked out with all remote branch information. Normally (get_full_repo=False, the default), the git option --depth 1 will be used if the version of git and the specified transport protocol support it, and --single-branch will be used if the version of git supports it.

Only one of tag, branch, or commit can be used at a time.

The destination directory for the clone is the standard stage source path.

- Default branch

To fetch a repository’s default branch:

class Example(Package):
    git = "https://github.com/example-project/example.git"

    version("develop")

This download method is untrusted, and is not recommended. Aside from HTTPS, there is no way to verify that the repository has not been compromised, and the commit you get when you install the package likely won’t be the same commit that was used when the package was first written. Additionally, the default branch may change. It is best to at least specify a branch name.

- Branches

To fetch a particular branch, use the branch parameter:

version("experimental", branch="experimental")

This download method is untrusted, and is not recommended. Branches are moving targets, so the commit you get when you install the package likely won’t be the same commit that was used when the package was first written.

- Tags

To fetch from a particular tag, use tag instead:

version("1.0.1", tag="v1.0.1")

This download method is untrusted, and is not recommended. Although tags are generally more stable than branches, Git allows tags to be moved. Many developers use tags to denote rolling releases, and may move the tag when a bug is patched.

- Commits

Finally, to fetch a particular commit, use commit:

version("2014-10-08", commit="9d38cd4e2c94c3cea97d0e2924814acc")

This doesn’t have to be a full hash; you can abbreviate it as you’d expect with git:

version("2014-10-08", commit="9d38cd")

This download method is trusted. It is the recommended way to securely download from a Git repository.

It may be useful to provide a saner version for commits like this, e.g. you might use the date as the version, as done above. Or, if you know the commit at which a release was cut, you can use the release version. It’s up to the package author to decide what makes the most sense. Although you can use the commit hash as the version number, this is not recommended, as it won’t sort properly.

- Submodules

You can supply submodules=True to cause Spack to fetch submodules recursively along with the repository at fetch time.

version("1.0.1", tag="v1.0.1", submodules=True)

If a package needs more fine-grained control over submodules, define submodules to be a callable function that takes the package instance as its only argument. The function should return a list of submodules to be fetched.

def submodules(package):
    submodules = []
    if "+variant-1" in package.spec:
        submodules.append("submodule_for_variant_1")
    if "+variant-2" in package.spec:
        submodules.append("submodule_for_variant_2")
    return submodules


class MyPackage(Package):
    version("0.1.0", submodules=submodules)

For more information about git submodules see the manpage of git:

man git-submodule.
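The trust rules for the Git parameters discussed in this section can be summarized in a few lines. The helper below is hypothetical and only restates the text: at most one of tag, branch, or commit may be given, and only a commit counts as a trusted download.

```python
def is_trusted_git_version(**version_kwargs):
    """Return True if the Git reference pins an exact commit."""
    refs = [key for key in ("tag", "branch", "commit") if key in version_kwargs]
    if len(refs) > 1:
        raise ValueError(
            "only one of tag, branch, or commit can be used, got: " + ", ".join(refs)
        )
    # Default-branch, branch, and tag fetches can all move over time.
    return refs == ["commit"]
```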

GitHub

If a project is hosted on GitHub, any valid Git branch, tag, or hash may be downloaded as a tarball. This is accomplished simply by constructing an appropriate URL. Spack can checksum any package downloaded this way, thereby producing a trusted download. For example, the following downloads a particular hash, and then applies a checksum.

version("1.9.5.1.1", md5="d035e4bc704d136db79b43ab371b27d2",
        url="https://www.github.com/jswhit/pyproj/tarball/0be612cc9f972e38b50a90c946a9b353e2ab140f")
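The URL in this example follows a simple pattern: owner, repository, and the reference to download. A small helper that reproduces the same pattern might look as follows; github_tarball_url is a name chosen for illustration, and the pattern is taken from the example above rather than from any GitHub API reference.

```python
def github_tarball_url(owner, repo, ref):
    """Build a tarball URL for a branch, tag, or commit hash."""
    return f"https://www.github.com/{owner}/{repo}/tarball/{ref}"
```

The resulting URL is passed as the url argument of version(), together with a checksum of the downloaded file.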

Mercurial

Fetching with Mercurial works much like Git, but you

use the hg parameter.

The destination directory is still the standard stage source path.

- Default branch

Add the hg attribute with no revision passed to version:

class Example(Package):
    hg = "https://bitbucket.org/example-project/example"

    version("develop")

This download method is untrusted, and is not recommended. As with Git’s default fetching strategy, there is no way to verify the integrity of the download.

- Revisions

To fetch a particular revision, use the revision parameter:

version("1.0", revision="v1.0")

Unlike git, which has special parameters for different types of revisions, you can use revision for branches, tags, and commits when you fetch with Mercurial. Like Git, fetching specific branches or tags is an untrusted download method, and is not recommended. The recommended fetch strategy is to specify a particular commit hash as the revision.

Subversion

To fetch with subversion, use the svn and revision parameters.

The destination directory will be the standard stage source path.

- Fetching the head

Simply add an svn parameter to the package:

class Example(Package):
    svn = "https://outreach.scidac.gov/svn/example/trunk"

    version("develop")

This download method is untrusted, and is not recommended for the same reasons as mentioned above.

- Fetching a revision

To fetch a particular revision, add a revision argument to the version directive:

version("develop", revision=128)

This download method is untrusted, and is not recommended.

Unfortunately, Subversion has no commit hashing scheme like Git and Mercurial do, so there is no way to guarantee that the download you get is the same as the download used when the package was created. Use at your own risk.

Subversion branches are handled as part of the directory structure, so

you can check out a branch or tag by changing the URL. If you want to

package multiple branches, simply add a svn argument to each

version directive.

CVS

CVS (Concurrent Versions System) is an old centralized version control system. It is a predecessor of Subversion.

To fetch with CVS, use the cvs, branch, and date parameters.

The destination directory will be the standard stage source path.

- Fetching the head

Simply add a cvs parameter to the package:

class Example(Package):
    cvs = ":pserver:outreach.scidac.gov/cvsroot%module=modulename"

    version("1.1.2.4")

CVS repository locations are described using an older syntax that is different from today’s ubiquitous URL syntax. :pserver: denotes the transport method. CVS servers can host multiple repositories (called “modules”) at the same location, and one needs to specify both the server location and the module name to access. Spack combines both into one string using the %module=modulename suffix shown above.

This download method is untrusted.

- Fetching a date

Versions in CVS are commonly specified by date. To fetch a particular branch or date, add a branch and/or date argument to the version directive:

version("2021.4.22", branch="branchname", date="2021-04-22")

Unfortunately, CVS does not identify repository-wide commits via a revision or hash like Subversion, Git, or Mercurial do. This makes it impossible to specify an exact commit to check out.

CVS has more features, but since CVS is rarely used these days, Spack does not support all of them.
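To make the combined cvs string easier to read, here is a sketch that splits it back into the server location and the module name. The function split_cvs_location is hypothetical; it only relies on the %module= separator described in this section.

```python
def split_cvs_location(location):
    """Split 'root%module=name' into (root, name)."""
    root, separator, module = location.partition("%module=")
    if not separator or not module:
        raise ValueError("expected a '%module=<name>' suffix in: " + location)
    return root, module
```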

Go

Go isn’t a VCS; it is a programming language with a built-in command, go get, that fetches packages and their dependencies automatically. The destination directory will be the standard stage source path.

This strategy can clone a Git repository, or download from another source location. For example:

class ThePlatinumSearcher(Package):
    homepage = "https://github.com/monochromegane/the_platinum_searcher"
    go = "github.com/monochromegane/the_platinum_searcher/..."

    version("head")

Go cannot be used to fetch a particular commit or branch; it always downloads the head of the repository. This download method is untrusted, and is not recommended. Use another fetch strategy whenever possible.


Variants

Many software packages can be configured to enable optional

features, which often come at the expense of additional dependencies or

longer build times. To be flexible enough and support a wide variety of

use cases, Spack allows you to expose to the end-user the ability to choose

which features should be activated in a package at the time it is installed.

The mechanism to be employed is the spack.directives.variant() directive.

Boolean variants

In their simplest form variants are boolean options specified at the package level:

class Hdf5(AutotoolsPackage):
    ...
    variant(
        "shared", default=True, description="Builds a shared version of the library"
    )

with a default value and a description of their meaning / use in the package.

Variants can be tested in any context where a spec constraint is expected.

In the example above the shared variant is tied to the build of shared dynamic

libraries. To pass the right option at configure time we can branch depending on

its value:

def configure_args(self):
    ...
    if self.spec.satisfies("+shared"):
        extra_args.append("--enable-shared")
    else:
        extra_args.append("--disable-shared")
        extra_args.append("--enable-static-exec")

As explained in Variants the constraint +shared means

that the boolean variant is set to True, while ~shared means it is set

to False.

Another common example is the optional activation of an extra dependency,
which requires using the variant in the when argument of
spack.directives.depends_on():

class Hdf5(AutotoolsPackage):
    ...
    variant("szip", default=False, description="Enable szip support")
    depends_on("szip", when="+szip")

as shown in the snippet above where szip is modeled to be an optional

dependency of hdf5.

Multi-valued variants

If need be, Spack can go beyond Boolean variants and permit an arbitrary

number of allowed values. This might be useful when modeling

options that are tightly related to each other.

The values in this case are passed to the spack.directives.variant()

directive as a tuple:

class Blis(Package):
    ...
    variant(
        "threads",
        default="none",
        description="Multithreading support",
        values=("pthreads", "openmp", "none"),
        multi=False,
    )

In the example above the argument multi is set to False to indicate

that only one among all the variant values can be active at any time. This

constraint is enforced by the parser and an error is emitted if a user

specifies two or more values at the same time:

$ spack spec blis threads=openmp,pthreads
Input spec
--------------------------------
blis threads=openmp,pthreads

Concretized
--------------------------------
==> Error: multiple values are not allowed for variant "threads"

Another useful note is that Python’s None is not allowed as a default value

and therefore it should not be used to denote that no feature was selected.

Users should instead select another value, like "none", and handle it explicitly

within the package recipe if need be:

if self.spec.variants["threads"].value == "none":
    options.append("--no-threads")

In cases where multiple values can be selected at the same time multi should

be set to True:

class Gcc(AutotoolsPackage):
    ...
    variant(
        "languages",
        default="c,c++,fortran",
        values=("ada", "brig", "c", "c++", "fortran", "go", "java", "jit", "lto", "objc", "obj-c++"),
        multi=True,
        description="Compilers and runtime libraries to build",
    )

Within a package recipe a multi-valued variant is tested using a key=value syntax:

if spec.satisfies("languages=jit"):
    options.append("--enable-host-shared")
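The difference between multi=False and multi=True can be sketched with a small validator for the comma-separated value a user types. This is a simplified model written for this guide, with a made-up function name; the single-value error message is copied from the spack spec output shown earlier, while the other message is invented.

```python
def parse_variant(name, text, allowed, multi):
    """Split 'a,b,c' into values and check them against the declaration."""
    values = tuple(text.split(","))
    unknown = [value for value in values if value not in allowed]
    if unknown:
        raise ValueError(f'invalid values for variant "{name}": ' + ", ".join(unknown))
    if not multi and len(values) > 1:
        raise ValueError(f'multiple values are not allowed for variant "{name}"')
    return values
```

A single-valued variant such as threads rejects openmp,pthreads, whereas a multi-valued variant such as languages accepts c,c++,jit.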

Complex validation logic for variant values

To cover complex use cases, the spack.directives.variant() directive
can accept as the values argument a full-fledged object which has
default and other arguments of the directive embedded as attributes.

An example, already implemented in Spack’s core, is spack.variant.DisjointSetsOfValues.

This class is used to implement a few convenience functions, like

spack.variant.any_combination_of():

class Adios(AutotoolsPackage):
    ...
    variant(
        "staging",
        values=any_combination_of("flexpath", "dataspaces"),
        description="Enable dataspaces and/or flexpath staging transports",
    )

that allows any combination of the specified values, and also allows the

user to specify "none" (as a string) to choose none of them.

The objects returned by these functions can be modified at will by chaining

method calls to change the default value, customize the error message or

other similar operations:

class Mvapich2(AutotoolsPackage):
    ...
    variant(
        "process_managers",
        description="List of the process managers to activate",
        values=disjoint_sets(
            ("auto",), ("slurm",), ("hydra", "gforker", "remshell")
        ).prohibit_empty_set().with_error(
            "'slurm' or 'auto' cannot be activated along with "
            "other process managers"
        ).with_default("auto").with_non_feature_values("auto"),
    )
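The idea behind disjoint sets of values can be shown without Spack: a selection is valid only if all chosen values come from the same group. The function check_disjoint below is a hypothetical stand-in for that rule, including the "no empty selection" behavior requested in the example above; it is not how spack.variant.DisjointSetsOfValues is implemented.

```python
def check_disjoint(selected, groups):
    """Validate that all selected values belong to a single group."""
    chosen = set(selected)
    if not chosen:
        raise ValueError("at least one value must be selected")
    if not any(chosen.issubset(group) for group in groups):
        raise ValueError(
            "values from different groups cannot be combined: "
            + ", ".join(sorted(chosen))
        )
    return tuple(sorted(chosen))
```

With the groups from the example, hydra and gforker may be combined, but slurm cannot be combined with hydra.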

Conditional Possible Values

There are cases where a variant may take multiple values, and the list of allowed values expands over time. Think, for instance, of the C++ standard with which we might compile Boost, which can take one of multiple possible values, with the latest standards only available from a certain version on.

To model a similar situation we can use conditional possible values in the variant declaration:

variant(
    "cxxstd", default="98",
    values=(
        "98", "11", "14",
        # C++17 is not supported by Boost < 1.63.0.
        conditional("17", when="@1.63.0:"),
        # C++20/2a is not supported by Boost < 1.73.0
        conditional("2a", "2b", when="@1.73.0:")
    ),
    multi=False,
    description="Use the specified C++ standard when building.",
)

The snippet above allows 98, 11 and 14 as unconditional possible values for the
cxxstd variant, while 17 requires a version greater than or equal to 1.63.0
and both 2a and 2b require a version greater than or equal to 1.73.0.
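In plain Python, the effect of these conditions on the set of allowed values looks roughly like this. The function allowed_cxxstd is purely illustrative and represents the Boost version as a tuple of integers, which is an assumption of the sketch.

```python
def allowed_cxxstd(boost_version):
    """List the cxxstd values available for a Boost version tuple."""
    values = ["98", "11", "14"]  # always available
    if boost_version >= (1, 63, 0):
        values.append("17")  # conditional("17", when="@1.63.0:")
    if boost_version >= (1, 73, 0):
        values.extend(["2a", "2b"])  # conditional("2a", "2b", when="@1.73.0:")
    return values
```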

Conditional Variants

The variant directive accepts a when clause. The variant will only

be present on specs that otherwise satisfy the spec listed as the

when clause. For example, the following class has a variant

bar when it is at version 2.0 or higher.

class Foo(Package):
    ...
    variant("bar", default=False, when="@2.0:", description="help message")

The when clause follows the same syntax and accepts the same

values as the when argument of

spack.directives.depends_on().

Sticky Variants

A variant can be marked as sticky by setting the sticky argument of the
variant directive to True:

variant("bar", default=False, sticky=True)

A sticky variant differs from a regular one in that it is always set

to either:

An explicit value appearing in a spec literal or

Its default value

The concretizer thus is not free to pick an alternate value to work around conflicts, but will error out instead. Setting this property on a variant is useful in cases where the variant allows some dangerous or controversial options (e.g. using unsupported versions of a compiler for a library) and the packager wants to ensure that allowing these options is done on purpose by the user, rather than automatically by the solver.

Overriding Variants

Packages may override variants for several reasons, most often to change the default from a variant defined in a parent class or to change the conditions under which a variant is present on the spec.

When a variant is defined multiple times, whether in the same package

file or in a subclass and a superclass, the last definition is used

for all attributes except for the when clauses. The when

clauses are accumulated through all invocations, and the variant is

present on the spec if any of the accumulated conditions are

satisfied.

For example, consider the following package:

class Foo(Package):
    ...
    variant("bar", default=False, when="@1.0", description="help1")
    variant("bar", default=True, when="platform=darwin", description="help2")
    ...

This package foo has a variant bar when the spec satisfies

either @1.0 or platform=darwin, but not for other platforms at

other versions. The default for this variant, when it is present, is

always True, regardless of which condition of the variant is

satisfied. This allows packages to override variants in packages or

build system classes from which they inherit, by modifying the variant

values without modifying the when clause. It also allows a package
to implement OR semantics for a variant when clause by
duplicating the variant definition.
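The accumulation rule can be modeled with a small registry: each definition appends its when condition, while the other attributes are overwritten by the latest definition. The functions below are hypothetical and represent conditions as plain strings that are either satisfied or not.

```python
def define_variant(registry, name, default, when, description):
    """Record a variant definition; later definitions override attributes."""
    entry = registry.setdefault(name, {"when": []})
    entry["when"].append(when)          # conditions accumulate
    entry["default"] = default          # last definition wins
    entry["description"] = description  # last definition wins
    return entry


def is_present(entry, satisfied_conditions):
    """The variant exists if any accumulated condition is satisfied."""
    return any(cond in satisfied_conditions for cond in entry["when"])
```

Replaying the two definitions of bar from the example gives a default of True and a variant that is present for @1.0 or for platform=darwin.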

Resources (expanding extra tarballs)

Some packages (most notably compilers) provide optional features if additional

resources are expanded within their source tree before building. In Spack it is

possible to describe such a need with the resource directive:

resource(
    name="cargo",
    git="https://github.com/rust-lang/cargo.git",
    tag="0.10.0",
    destination="cargo",
)

Based on the keywords present among the arguments the appropriate FetchStrategy

will be used for the resource. The keyword destination is relative to the source

root of the package and should point to where the resource is to be expanded.

Licensed software

In order to install licensed software, Spack needs to know a few more details about a package. The following class attributes should be defined.

license_required

Boolean. If set to True, this software requires a license. If set to

False, all of the following attributes will be ignored. Defaults to

False.

license_comment

String. Contains the symbol used by the license manager to denote a comment.

Defaults to #.

license_files

List of strings. These are files that the software searches for when looking for a license. All file paths must be relative to the installation directory. More complex packages like Intel may require multiple licenses for individual components. Defaults to the empty list.

license_vars

List of strings. Environment variables that can be set to tell the software where to look for a license if it is not in the usual location. Defaults to the empty list.

license_url

String. A URL pointing to license setup instructions for the software. Defaults to the empty string.

For example, let’s take a look at the package for the PGI compilers.

# Licensing

license_required = True

license_comment = "#"

license_files = ["license.dat"]

license_vars = ["PGROUPD_LICENSE_FILE", "LM_LICENSE_FILE"]

license_url = "http://www.pgroup.com/doc/pgiinstall.pdf"

As you can see, PGI requires a license. Its license manager, FlexNet, uses

the # symbol to denote a comment. It expects the license file to be

named license.dat and to be located directly in the installation prefix.

If you would like the license file to be located elsewhere, simply set

PGROUPD_LICENSE_FILE or LM_LICENSE_FILE after installation. For

further instructions on installation and licensing, see the URL provided.

Let’s walk through a sample PGI installation to see exactly what Spack is

and isn’t capable of. Since PGI does not provide a download URL, it must

be downloaded manually. It can either be added to a mirror or located in

the current directory when spack install pgi is run. See Mirrors (mirrors.yaml)

for instructions on setting up a mirror.

After running spack install pgi, the first thing that will happen is

Spack will create a global license file located at

$SPACK_ROOT/etc/spack/licenses/pgi/license.dat. It will then open up the

file using your favorite editor. It will look like

this:

# A license is required to use pgi.

#

# The recommended solution is to store your license key in this global

# license file. After installation, the following symlink(s) will be

# added to point to this file (relative to the installation prefix):

#

# license.dat

#

# Alternatively, use one of the following environment variable(s):

#

# PGROUPD_LICENSE_FILE

# LM_LICENSE_FILE

#

# If you choose to store your license in a non-standard location, you may

# set one of these variable(s) to the full pathname to the license file, or

# port@host if you store your license keys on a dedicated license server.

# You will likely want to set this variable in a module file so that it

# gets loaded every time someone tries to use pgi.

#

# For further information on how to acquire a license, please refer to:

#

# http://www.pgroup.com/doc/pgiinstall.pdf

#

# You may enter your license below.

You can add your license directly to this file, or tell FlexNet to use a license stored on a separate license server. Here is an example that points to a license server called licman1:

SERVER licman1.mcs.anl.gov 00163eb7fba5 27200

USE_SERVER

If your package requires the license to install, you can reference the

location of this global license using self.global_license_file.

After installation, symlinks for all of the files given in

license_files will be created, pointing to this global license.

If you install a different version or variant of the package, Spack

will automatically detect and reuse the already existing global license.

If the software you are trying to package doesn’t rely on license files, Spack will print a warning message, letting the user know that they need to set an environment variable or pointing them to installation documentation.

Patches

Depending on the host architecture, package version, known bugs, or other issues, you may need to patch your software to get it to build correctly. Like many other package systems, Spack allows you to store patches alongside your package files and apply them to source code after it’s downloaded.

patch

You can specify patches in your package file with the patch()

directive. patch looks like this:

class Mvapich2(Package):
    ...
    patch("ad_lustre_rwcontig_open_source.patch", when="@1.9:")

The first argument can be either a URL or a filename. It specifies a patch file that should be applied to your source. If the patch you supply is a filename, then the patch needs to live within the spack source tree. For example, the patch above lives in a directory structure like this:

$SPACK_ROOT/var/spack/repos/builtin/packages/
    mvapich2/
        package.py
        ad_lustre_rwcontig_open_source.patch

If you supply a URL instead of a filename, you need to supply a

sha256 checksum, like this:

patch("http://www.nwchem-sw.org/images/Tddft_mxvec20.patch",
      sha256="252c0af58be3d90e5dc5e0d16658434c9efa5d20a5df6c10bf72c2d77f780866")

Spack includes the hashes of patches in its versioning information, so

that the same package with different patches applied will have different

hash identifiers. To ensure that the hashing scheme is consistent, you

must use a sha256 checksum for the patch. Patches will be fetched

from their URLs, checked, and applied to your source code. You can use

the GNU utils sha256sum or the macOS shasum -a 256 commands to

generate a checksum for a patch file.
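If neither command-line tool is at hand, the same checksum can be computed with Python's standard library. The following is a minimal sketch, not part of Spack; the helper name sha256_of_file and the file name example.patch are made up for illustration.

```python
import hashlib
import os
import tempfile


def sha256_of_file(path, chunk_size=65536):
    """Return the hex SHA-256 digest of the file at ``path``."""
    digest = hashlib.sha256()
    with open(path, "rb") as f:
        # Read in chunks so large patch files need not fit in memory.
        for chunk in iter(lambda: f.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


# Demo on a throwaway file; "example.patch" is a made-up name.
with tempfile.TemporaryDirectory() as tmpdir:
    patch_path = os.path.join(tmpdir, "example.patch")
    with open(patch_path, "wb") as f:
        f.write(b"--- a/file.c\n+++ b/file.c\n")
    demo_digest = sha256_of_file(patch_path)

print(demo_digest)
```

The printed value is the string you would pass as sha256 to the patch() directive.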

Spack can also handle compressed patches. If you use these, Spack needs

a little more help. Specifically, it needs two checksums: the

sha256 of the patch and archive_sha256 for the compressed

archive. archive_sha256 helps Spack ensure that the downloaded

file is not corrupted or malicious, before running it through a tool like

tar or zip. The sha256 of the patch is still required so

that it can be included in specs. Providing it in the package file

ensures that Spack won’t have to download and decompress patches it won’t

end up using at install time. Both the archive and patch checksum are

checked when patch archives are downloaded.

patch("http://www.nwchem-sw.org/images/Tddft_mxvec20.patch.gz",
      sha256="252c0af58be3d90e5dc5e0d16658434c9efa5d20a5df6c10bf72c2d77f780866",
      archive_sha256="4e8092a161ec6c3a1b5253176fcf33ce7ba23ee2ff27c75dbced589dabacd06e")
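To see how the two checksums relate, here is a small standalone sketch for a gzip-compressed patch. It only uses Python's standard library and is not Spack's own code; the function name patch_checksums and the sample patch text are assumptions made for the example.

```python
import gzip
import hashlib


def patch_checksums(archive_bytes):
    """Return (sha256, archive_sha256) for a gzip-compressed patch."""
    # Checksum of the file exactly as downloaded.
    archive_sha256 = hashlib.sha256(archive_bytes).hexdigest()
    # Checksum of the patch after decompression.
    patch_bytes = gzip.decompress(archive_bytes)
    sha256 = hashlib.sha256(patch_bytes).hexdigest()
    return sha256, archive_sha256


# Made-up patch content, compressed in memory for the demo.
patch_text = b"--- a/file.c\n+++ b/file.c\n"
archive = gzip.compress(patch_text)

sha256, archive_sha256 = patch_checksums(archive)
print("sha256         =", sha256)
print("archive_sha256 =", archive_sha256)
```

The two digests differ because one covers the compressed bytes and the other covers the decompressed patch.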

patch keyword arguments are described below.

sha256, archive_sha256

Hashes of downloaded patch and compressed archive, respectively. Only needed for patches fetched from URLs.

when

If supplied, this is a spec that tells Spack when to apply the patch. If the spec of the package being installed matches this spec, the patch will be applied. In our example above, the patch is applied when mvapich2 is at version 1.9 or higher.

level

This tells Spack how to run the patch command. By default, the level is 1 and Spack runs patch -p 1. If level is 2, Spack will run patch -p 2, and so on.

A lot of people are confused by level, so here’s a primer. If you look in your patch file, you may see something like this:

 1  --- a/src/mpi/romio/adio/ad_lustre/ad_lustre_rwcontig.c 2013-12-10 12:05:44.806417000 -0800
 2  +++ b/src/mpi/romio/adio/ad_lustre/ad_lustre_rwcontig.c 2013-12-10 11:53:03.295622000 -0800
 3  @@ -8,7 +8,7 @@
 4    * Copyright (C) 2008 Sun Microsystems, Lustre group
 5    \*/
 6
 7  -#define _XOPEN_SOURCE 600
 8  +//#define _XOPEN_SOURCE 600
 9   #include <stdlib.h>
10   #include <malloc.h>
11   #include "ad_lustre.h"

Lines 1-2 show paths with synthetic a/ and b/ prefixes. These

are placeholders for the two mvapich2 source directories that

diff compared when it created the patch file. This is git’s

default behavior when creating patch files, but other programs may

behave differently.

-p1 strips off the first level of the prefix in both paths,

allowing the patch to be applied from the root of an expanded mvapich2

archive. If you set level to 2, it would strip off src, and

so on.

It’s generally easier to just structure your patch file so that it

applies cleanly with -p1, but if you’re using a patch you didn’t

create yourself, level can be handy.
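The effect of different levels on the path from the patch header can be sketched in a few lines of Python. This is a simplified model of the -p option for plain relative paths, not the patch program's actual implementation; strip_components is a hypothetical helper.

```python
def strip_components(path, level):
    """Drop the first ``level`` slash-separated components, like ``patch -pN``."""
    parts = path.split("/")
    if level >= len(parts):
        raise ValueError(f"cannot strip {level} components from {path!r}")
    return "/".join(parts[level:])


# Path taken from the first line of the example patch above.
header_path = "a/src/mpi/romio/adio/ad_lustre/ad_lustre_rwcontig.c"

for level in (0, 1, 2):
    print(level, strip_components(header_path, level))
```

With level 1 the result starts at src/, which is what you want when patch runs from the root of the expanded archive; with level 2 it starts at mpi/.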

working_dir

This tells Spack where to run the patch command. By default, the working directory is the source path of the stage (.). However, patches are sometimes made relative to a subdirectory, and this is where working_dir comes in handy. Internally, the working directory is passed to patch via the -d option.

Let’s take the example patch from above and assume for some reason,

it can only be downloaded in the following form:

--- a/romio/adio/ad_lustre/ad_lustre_rwcontig.c 2013-12-10 12:05:44.806417000 -0800
+++ b/romio/adio/ad_lustre/ad_lustre_rwcontig.c 2013-12-10 11:53:03.295622000 -0800
@@ -8,7 +8,7 @@
  * Copyright (C) 2008 Sun Microsystems, Lustre group
  \*/

-#define _XOPEN_SOURCE 600
+//#define _XOPEN_SOURCE 600
 #include <stdlib.h>
 #include <malloc.h>
 #include "ad_lustre.h"

Hence, the patch needs to be applied in the src/mpi subdirectory, and the working_dir="src/mpi" option does exactly that.
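How level and working_dir combine to locate the file being patched can be modeled as follows. This is an illustrative sketch under the simplifying assumption that stripping a level means dropping one leading path component; patched_file is a hypothetical helper, not a Spack function.

```python
import posixpath


def patched_file(header_path, level=1, working_dir="."):
    """Return the file a patch hunk targets, relative to the stage source path."""
    # Drop ``level`` leading components, as ``patch -pN`` would.
    stripped = "/".join(header_path.split("/")[level:])
    # Resolve the remainder relative to the working directory (``patch -d``).
    return posixpath.normpath(posixpath.join(working_dir, stripped))


# Path from the header of the patch shown above.
header = "a/romio/adio/ad_lustre/ad_lustre_rwcontig.c"

print(patched_file(header))                         # default working directory
print(patched_file(header, working_dir="src/mpi"))  # with working_dir set
```

Only the second call yields a path under src/mpi, where the file actually lives in the mvapich2 source tree.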

Patch functions

In addition to supplying patch files, you can write a custom function

to patch a package’s source. For example, the py-pyside package

contains some custom code for tweaking the way the PySide build

handles RPATH:

def patch(self):
    """Undo PySide RPATH handling and add Spack RPATH."""
    # Figure out the special RPATH
    rpath = self.rpath
    rpath.append(os.path.join(python_platlib, "PySide"))

    # Fix subprocess.mswindows check for Python 3.5
    # https://github.com/pyside/pyside-setup/pull/55
    filter_file(
        "^if subprocess.mswindows:",
        'mswindows = (sys.platform == "win32")\r\nif mswindows:',
        "popenasync.py",
    )
    filter_file("^    if subprocess.mswindows:", "    if mswindows:", "popenasync.py")

    # Remove check for python version because the above patch adds support for newer versions
    filter_file("^check_allowed_python_version()", "", "setup.py")

    # Add Spack's standard CMake args to the sub-builds.
    # They're called BY setup.py so we have to patch it.
    filter_file(
        r"OPTION_CMAKE,",
        r"OPTION_CMAKE, "
        + (
            '"-DCMAKE_INSTALL_RPATH_USE_LINK_PATH=FALSE", '
            '"-DCMAKE_INSTALL_RPATH=%s",' % ":".join(rpath)
        ),
        "setup.py",
    )

    # PySide tries to patch ELF files to remove RPATHs
    # Disable this and go with the one we set.
    if self.spec.satisfies("@1.2.4:"):
        rpath_file = "setup.py"
    else:
        rpath_file = "pyside_postinstall.py"

    filter_file(r"(^\s*)(rpath_cmd\(.*\))", r"\1#\2", rpath_file)

A patch function, if present, will be run after patch files are

applied and before install() is run.

You could put this logic in install(), but putting it in a patch function gives you some benefits. First, Spack ensures that the patch() function is run once per code checkout. That means that if you start an install, interrupt it with Ctrl-C, and run the install again, the code in the patch function is only run once. Also, you can tell Spack to run only the patching part of the build using the spack patch command.
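To make the last filter_file call in the py-pyside example easier to read, the sketch below applies the same regular expression and replacement with Python's re module. It assumes filter_file behaves like a regular-expression substitution applied to each line of the file; the setup.py content used here is invented for the demonstration.

```python
import re

# Invented stand-in for the contents of setup.py.
setup_py = (
    "def install():\n"
    "    copy_libs()\n"
    "    rpath_cmd(srcpath)\n"
)

# Same pattern and replacement as in the example: group 1 keeps the leading
# whitespace, group 2 is the rpath_cmd(...) call, and '#' goes between them.
pattern = re.compile(r"(^\s*)(rpath_cmd\(.*\))")

patched = "".join(
    pattern.sub(r"\1#\2", line) for line in setup_py.splitlines(keepends=True)
)
print(patched)
```

The call is commented out in place while its indentation is preserved, so the surrounding Python code stays syntactically valid as long as the block contains other statements.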

Dependency patching

So far we’ve covered how the patch directive can be used by a package

to patch its own source code. Packages can also specify patches to be

applied to their dependencies, if they require special modifications. As

with all packages in Spack, a patched dependency library can coexist with

other versions of that library. See the section on depends_on for more details.

Inspecting patches

If you want to better understand the patches that Spack applies to your

packages, you can do that using spack spec, spack find, and other

query commands. Let’s look at m4. If you run spack spec m4, you

can see the patches that would be applied to m4:

$ spack spec m4
Input spec
--------------------------------
m4

Concretized
--------------------------------
m4@1.4.18%apple-clang@9.0.0 patches=3877ab548f88597ab2327a2230ee048d2d07ace1062efe81fc92e91b7f39cd00,c0a408fbffb7255fcc75e26bd8edab116fc81d216bfd18b473668b7739a4158e,fc9b61654a3ba1a8d6cd78ce087e7c96366c290bc8d2c299f09828d793b853c8 +sigsegv arch=darwin-highsierra-x86_64
    ^libsigsegv@2.11%apple-clang@9.0.0 arch=darwin-highsierra-x86_64

You can also see patches that have been applied to installed packages

with spack find -v:

$ spack find -v m4
==> 1 installed package
-- darwin-highsierra-x86_64 / apple-clang@9.0.0 -----------------
m4@1.4.18 patches=3877ab548f88597ab2327a2230ee048d2d07ace1062efe81fc92e91b7f39cd00,c0a408fbffb7255fcc75e26bd8edab116fc81d216bfd18b473668b7739a4158e,fc9b61654a3ba1a8d6cd78ce087e7c96366c290bc8d2c299f09828d793b853c8 +sigsegv

In both cases above, you can see that the patches’ sha256 hashes are stored on the spec as a variant. As mentioned above, this means that you can have multiple, differently-patched versions of a package installed at once.

You can look up a patch by its sha256 hash (or a short version of it)

using the spack resource show command:

$ spack resource show 3877ab54
3877ab548f88597ab2327a2230ee048d2d07ace1062efe81fc92e91b7f39cd00
    path:       /home/spackuser/src/spack/var/spack/repos/builtin/packages/m4/gnulib-pgi.patch
    applies to: builtin.m4

spack resource show looks up downloadable resources from package

files by hash and prints out information about them. Above, we see that

the 3877ab54 patch applies to the m4 package. The output also

tells us where to find the patch.

Things get more interesting if you want to know about dependency

patches. For example, when dealii is built with boost@1.68.0, it

has to patch boost to work correctly. If you didn’t know this, you might

wonder where the extra boost patches are coming from:

$ spack spec dealii ^boost@1.68.0 ^hdf5+fortran | grep "\^boost"
^boost@1.68.0
^boost@1.68.0%apple-clang@9.0.0+atomic+chrono~clanglibcpp cxxstd=default +date_time~debug+exception+filesystem+graph~icu+iostreams+locale+log+math~mpi+multithreaded~numpy patches=2ab6c72d03dec6a4ae20220a9dfd5c8c572c5294252155b85c6874d97c323199,b37164268f34f7133cbc9a4066ae98fda08adf51e1172223f6a969909216870f ~pic+program_options~python+random+regex+serialization+shared+signals~singlethreaded+system~taggedlayout+test+thread+timer~versionedlayout+wave arch=darwin-highsierra-x86_64

$ spack resource show b37164268
b37164268f34f7133cbc9a4066ae98fda08adf51e1172223f6a969909216870f
    path:       /home/spackuser/src/spack/var/spack/repos/builtin/packages/dealii/boost_1.68.0.patch
    applies to: builtin.boost
    patched by: builtin.dealii

Here you can see that the patch is applied to boost by dealii,

and that it lives in dealii’s directory in Spack’s builtin

package repository.
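Looking up a patch by a shortened hash amounts to a prefix search over the known full hashes. The sketch below illustrates the idea with the two hashes shown in this section; it is not how spack resource show is implemented, and the PATCHES table and find_by_prefix helper are hypothetical.

```python
# Hash-to-path pairs copied from the command output in this section.
PATCHES = {
    "3877ab548f88597ab2327a2230ee048d2d07ace1062efe81fc92e91b7f39cd00":
        "m4/gnulib-pgi.patch",
    "b37164268f34f7133cbc9a4066ae98fda08adf51e1172223f6a969909216870f":
        "dealii/boost_1.68.0.patch",
}


def find_by_prefix(prefix, table=PATCHES):
    """Return the (hash, path) pair whose hash starts with ``prefix``."""
    matches = [(h, p) for h, p in table.items() if h.startswith(prefix)]
    if not matches:
        raise KeyError(f"no patch hash starts with {prefix!r}")
    if len(matches) > 1:
        # A short prefix may match several hashes; ask for more characters.
        raise KeyError(f"prefix {prefix!r} is ambiguous")
    return matches[0]


print(find_by_prefix("3877ab54"))
print(find_by_prefix("b37164268"))
```

A prefix only needs to be long enough to identify a single hash, which is why eight or nine characters suffice in the examples above.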

Handling RPATHs